Practical PowerShell Scripting for DevOps - Part 3

Practical Scripting Challenges and Solutions for DevOps with PowerShell


Hi, I’m back with Part 3 of this series. In this part, I will show you how to run loops in parallel for operations that don’t have race conditions or dependencies on each other. This is a fairly new feature, introduced in PowerShell 7.0, and it’s most useful for speeding up your scripts. As this is a continuation of a series, I highly recommend reading Part-1 and Part-2 first.

As I like to combine different concepts together, I will also combine the parallel loop approach with “Jobs” to show you how to make your scripts “Asynchronous”. Combined, these concepts can be reused in various scenarios to help you write smarter and faster scripts.

As we are looking for performance improvements in terms of execution time, I’ll also include a method you can use to measure and display the execution time of your PowerShell scripts.

Problem

Hey fellow DevOps Engineer! The last script improvement was magnificent: no more false positive failures, thanks to the retry mechanism you’ve implemented. However, we now have another problem! Since we introduced the retry mechanism into our HttpTest function, the script takes a lot of time to execute, as each website is tested sequentially. The impact is felt even more as our company has grown and we now have more endpoints to test.

1- We don’t care in which order we get our test results, as long as we have the results as fast as possible.

2- The worker infrastructure we are running these tests on is powerful enough to run 50 tests at a time.

3- We wish to re-use the helper function “New-HttpTestResult” as is, or with as little modification as possible.

4- Can you please add another output at the end of the script to display the total duration of the full script execution? This will help us confirm the performance improvements.

What Success Looks like for this Challenge

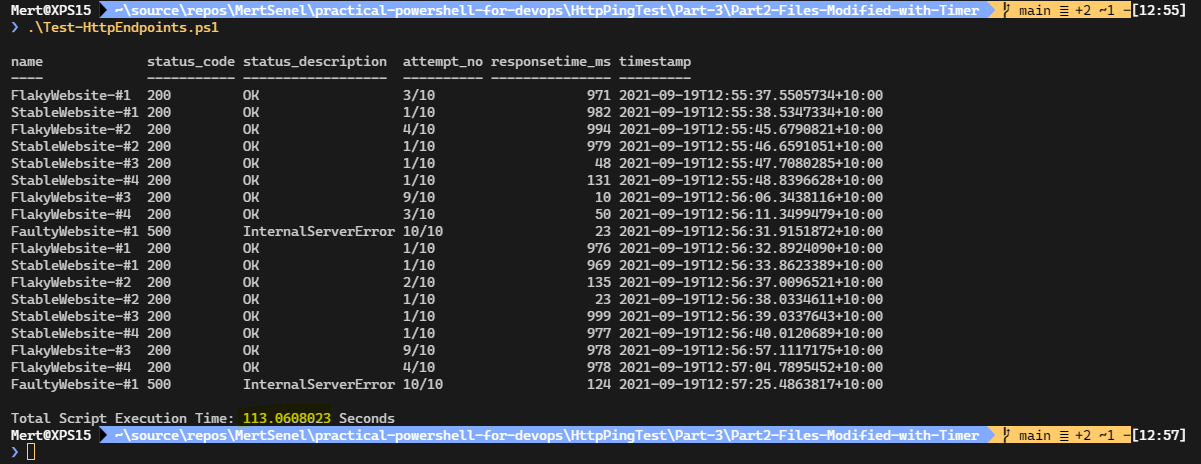

I’ve quickly added the timer feature to our Part-2 version of the script, which doesn’t yet have our new and improved parallel loop.

To fully appreciate the performance gains we will achieve from our improvement, I’ve also doubled our Tests.json file so that it tests 18 endpoints.

As can be seen from the screenshot, the total execution time is 113 seconds. That is quite a long time to wait, but this version of the script’s loop works in sequence and is synchronous by design: it has to wait for the test at hand to finish before moving on to the next one.

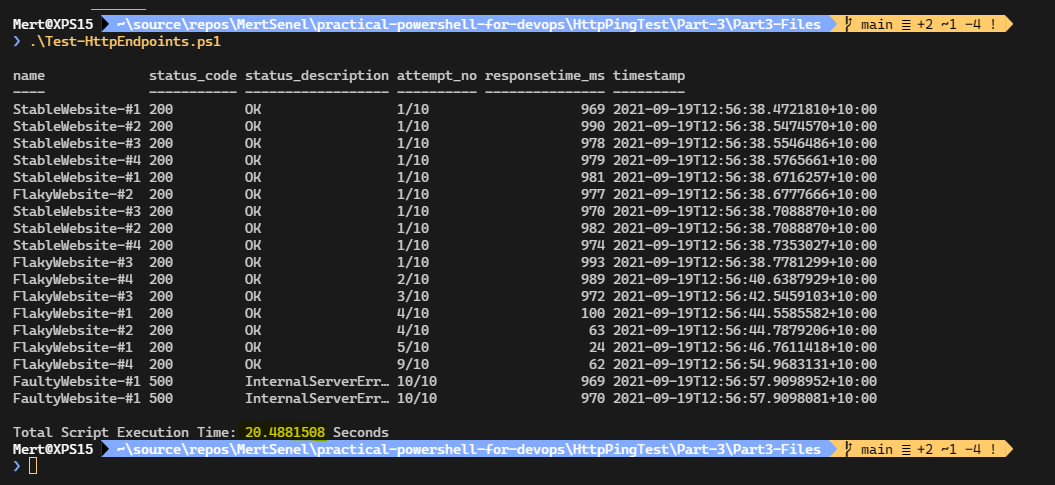

Here is the improved version:

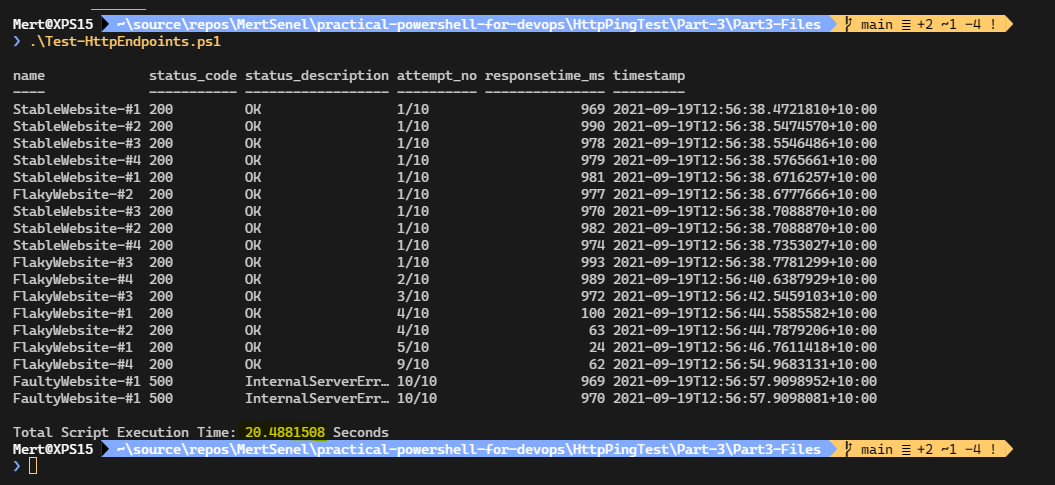

First of all, the same number of endpoints took only 20 seconds to test with this version of the script. Another thing to notice is that the order of the results is not the same as the order of our input; instead, the results appear in the order in which the tests finished.

As you can see, the tests which required more retries, and hence took more time, are displayed further down the list, whereas endpoints which returned a successful result on the first attempt are at the top.
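The completion-order behaviour described above can be reproduced with a small sketch. This is only an illustration, assuming PowerShell 7.0 or later; the `$delays` values are made up and `Start-Sleep` stands in for a real HTTP test.

```powershell
# Hypothetical workload: each item simply sleeps for the given number of milliseconds.
$delays = 900, 100, 500

$completed = $delays | ForEach-Object -Parallel {
    Start-Sleep -Milliseconds $_
    $_   # emit the item once its "work" is done
} -ThrottleLimit 3

# Results are emitted as each iteration finishes, not in input order,
# so the item with the shortest delay normally shows up first.
$completed
```

If the order of the output matters for your scenario, you would need to sort the results afterwards, as the parallel loop itself gives no ordering guarantee.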

Back to our original point: the new version has reduced the script’s execution duration from 113 seconds to 20 seconds, which is roughly a 5.6x improvement. The gain should become even more visible as the input list gets bigger.

Some Pointers For Writing Your Own Solution

You can use the Part-2 files of my sample solution (with the timer added) as your starting point: Part-2 Files

Some Test Endpoints You Can Use

As I’m providing the starting point for this part, you will already have the curated test endpoints JSON file readily available. It has multiple endpoints for simulating different scenarios. For more information, please read Part 1.

The Main Learning From the Challenge

We are using the new -Parallel feature of the ForEach-Object cmdlet together with thread jobs, both of which are available in PowerShell 7.

So I recommend reading and understanding these concepts from the documentation.

Finally, parallel ForEach-Object loops don’t support calling custom functions defined outside of the loop, because each iteration runs in its own runspace, separate from the caller’s. Hence we need to define our helper function inside each loop iteration. Currently, the cmdlet doesn’t support this natively, but there is a very easy workaround I found on Stack Overflow, and I’m sharing it with you so you don’t have to look for it.

The main idea is to capture your function as a plain string, and then recreate the function definition inside the loop iteration using this string as the source. You are then able to use your custom function(s) inside the loop.
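Here is a minimal sketch of that workaround, assuming PowerShell 7.0 or later. `Get-Square` is a hypothetical helper that stands in for New-HttpTestResult; only the capture-and-recreate pattern matters.

```powershell
# Hypothetical helper function defined in the calling scope.
function Get-Square {
    param([int]$Number)
    $Number * $Number
}

# Capture the function body as a plain string.
$funcDef = ${function:Get-Square}.ToString()

$squares = 1..5 | ForEach-Object -Parallel {
    # Recreate the function inside this iteration's runspace from the string.
    ${function:Get-Square} = $using:funcDef
    Get-Square -Number $_
}

# Sort only for display; parallel output order is not guaranteed.
$squares | Sort-Object
```

Without the `${function:Get-Square} = $using:funcDef` line, the call inside the loop would fail because the function is not defined in the iteration’s runspace.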

I’ve used the Stopwatch class to measure the script execution time. The Stopwatch object is created from a class built into .NET. PowerShell can create objects from such classes by referencing the type in square brackets (a type literal) and calling its static new() method.
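As a rough sketch, the timing pattern looks like this. `StartNew()` is used here as a shortcut that creates and starts the stopwatch in one call (the sample solution calls `new()` and then `Start()` instead), and `Start-Sleep` is just a placeholder for the work being measured.

```powershell
# Create and start a stopwatch in one call.
$stopwatch = [System.Diagnostics.Stopwatch]::StartNew()

# Placeholder for the work being measured.
Start-Sleep -Milliseconds 200

# Stop the timer and read the elapsed time in seconds.
$stopwatch.Stop()
$elapsedSeconds = [math]::Round($stopwatch.Elapsed.TotalSeconds, 2)
Write-Host "Total Script Execution Time: $elapsedSeconds Seconds"
```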

Links:

ForEach-Object

about_Thread_Jobs

How_to_Run_Custom_Function_in_Parallel_ForEach_Loop

Tasks You Need To Perform In Your Script

1- Convert the foreach loop we had in the Part-2 script to its ForEach-Object -Parallel implementation.

2- Be able to reference and use your custom New-HttpTestResult function inside the parallel ForEach-Object loop.

3- Make sure you are displaying results as they come, rather than waiting for all tests to finish executing. You will need to implement PowerShell thread jobs to achieve this behaviour.

4- Add a mechanism to measure and display the total execution time of the script.

Final Tips

From this point on you will see my sample solution. Depending on your level and learning style, you can take a look either before or after giving it your own shot.

My Sample Solution

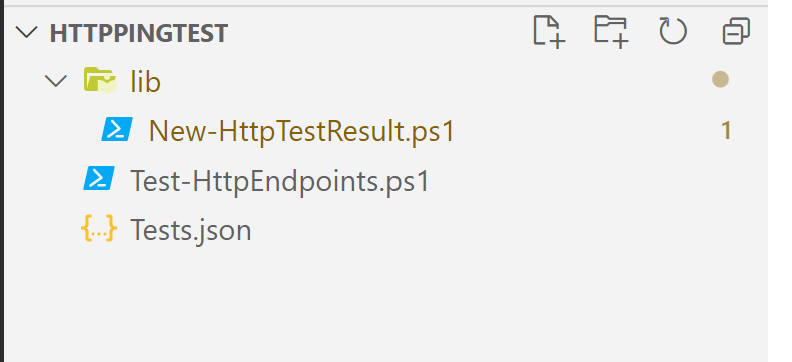

Folder Structure

Files for my Sample Solution

Tests.json

This is the list of endpoints I would like to test, stored in JSON format, which can be produced and consumed by a variety of modern languages. What we have here is an array of objects that have name and url keys, which we use to perform and label the tests.

As this file has been covered in the last two parts, I won’t be providing more explanation.
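For readers who skipped the earlier parts, the shape of the file can be sketched roughly as follows. The two entries are hypothetical placeholders, not the endpoints from the actual Tests.json of the series.

```powershell
# Hypothetical entries illustrating the name/url structure.
$json = @'
[
  { "name": "example-home", "url": "https://example.com" },
  { "name": "example-missing", "url": "https://example.com/not-found" }
]
'@

# ConvertFrom-Json turns the JSON array into PowerShell objects.
$tests = $json | ConvertFrom-Json

foreach ($test in $tests) {
    "{0} -> {1}" -f $test.name, $test.url
}
```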

Our helper function. Create this file under the path ./lib/New-HttpTestResult.ps1

As this file has been covered in the last two parts, I won’t be providing more explanation.

Test-HttpEndpoints.ps1

This is our main script, and all the changes for Part-3 of the series are in it.

I’ve introduced a Stopwatch object, created from the Stopwatch class built into .NET. PowerShell can create objects from such classes by referencing the type in square brackets (a type literal) and calling its static new() method.

Our foreach loop is now converted into its ForEach-Object -Parallel version, and with -AsJob the whole operation runs as a job. Hence the result of our loop is no longer a collection of test results, but a job object whose child jobs run the iterations in parallel in the background.

Now that the job has been created, we can wait for its results. Each result is the output of one New-HttpTestResult call.

From there on, we print each test result as soon as its iteration finishes running.
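The job-based flow can be sketched in isolation like this, assuming PowerShell 7.0 or later. The `Item` and `FinishedAt` properties are hypothetical stand-ins for a real test result, and `Start-Sleep` simulates tests of different durations.

```powershell
# -AsJob returns immediately with a job object instead of the loop output.
$job = 1..4 | ForEach-Object -Parallel {
    Start-Sleep -Milliseconds (100 * $_)
    [pscustomobject]@{
        Item       = $_
        FinishedAt = (Get-Date).ToString('HH:mm:ss.fff')
    }
} -AsJob -ThrottleLimit 4

# Each iteration runs as a child job of the returned job object.
"Child jobs: $($job.ChildJobs.Count)"

# Receive-Job -Wait streams results as they arrive and returns once the job is done.
$results = $job | Receive-Job -Wait
$results | Format-Table Item, FinishedAt

# Clean up the finished job.
Remove-Job -Job $job
```

In the main script the output of Receive-Job is piped straight to Format-Table; collecting it into `$results` first, as done here, is only for the sake of the illustration.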

[CmdletBinding()]
param (
    [Parameter(ValueFromPipeline = $true)]
    [string]
    $TestsFilePath = '.\Tests.json'
)

# Create a Stopwatch object.
$Stopwatch = [System.Diagnostics.Stopwatch]::new()

# Start the timer.
$Stopwatch.Start()

# Convert the JSON config file's string content to a PowerShell object.
$TestsObj = Get-Content -Path $TestsFilePath | ConvertFrom-Json

# Import the tester function.
. ./lib/New-HttpTestResult.ps1

# Capture the function body as a string so it can be recreated inside each parallel iteration.
$funcDef = ${function:New-HttpTestResult}.ToString()

# Run the tests in parallel (up to 50 at a time) as a background job.
$jobs = $TestsObj | ForEach-Object -Parallel {
    # Recreate the helper function inside this iteration's runspace.
    ${function:New-HttpTestResult} = $using:funcDef
    New-HttpTestResult -TestArgs $_
} -AsJob -ThrottleLimit 50

# Print the results as they arrive and wait until all tests have finished.
$jobs | Receive-Job -Wait | Format-Table

# Stop the timer.
$Stopwatch.Stop()
$TestDuration = $Stopwatch.Elapsed.TotalSeconds
Write-Host "Total Script Execution Time: $($TestDuration) Seconds"

Sample Runs

Conclusion

The biggest benefit of automation is its ability to scale. However, while writing our scripts, we also need to consider the input they will take and the nature of the operation we are performing.

Running loops in parallel, and using background jobs instead of waiting for each operation to finish, is a way to speed up automation workflows. Imagine you are responsible for writing an automation that shuts down tens of VMs on Friday evening. Would it really make sense to write a script that shuts them down one at a time? What if that number was in the thousands? How would your automation scale?
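To get a feel for the difference, here is a small simulation, assuming PowerShell 7.0 or later. The VM names are hypothetical and `Start-Sleep` stands in for a real shutdown call; `Measure-Command` is used here as a compact alternative to the Stopwatch approach from the main script.

```powershell
# Hypothetical VM names; no real VMs are touched.
$vmNames = 1..10 | ForEach-Object { "vm-$_" }

# One at a time: each simulated shutdown waits for the previous one.
$sequential = Measure-Command {
    foreach ($vm in $vmNames) {
        Start-Sleep -Milliseconds 200
    }
}

# All ten simulated shutdowns run side by side.
$parallel = Measure-Command {
    $vmNames | ForEach-Object -Parallel {
        Start-Sleep -Milliseconds 200
    } -ThrottleLimit 10
}

"Sequential: {0:N1}s, Parallel: {1:N1}s" -f $sequential.TotalSeconds, $parallel.TotalSeconds
```

The exact numbers will vary by machine, since starting parallel iterations has its own overhead, but the sequential run has to pay for every wait in full while the parallel run overlaps them.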

Where possible, I now tend to leverage parallel loops to speed up execution and avoid unnecessary waiting in my scripts. Of course, not every scenario can work with this type of loop, and deciding when you need it is also on you as the engineer. It’s another tool in our toolkit; it’s up to us to decide where and how to use it.